Hortonworks Reaches Out to SAS and Storm

Hortonworks this week revealed a new partnership with SAS that will enable the analytics giant to use its tools to analyze data stored in Hortonworks’ Hadoop distribution. It also announced plans to integrate the Apache Storm stream processing engine into its distribution, and to ship a preview by the end of the year.

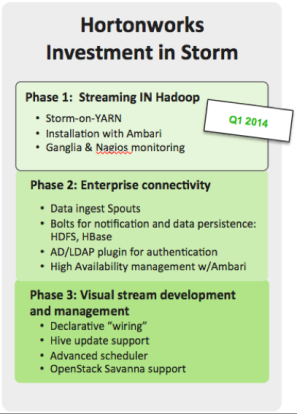

In a blog post on Tuesday, Hortonworks vice president of products Bob Page discussed the company’s plans around Storm and stream processing. “We are pleased to announce that we have initiated an engineering commitment to deeply integrate Storm with Hadoop, specifically as a supported component of the 100 percent open source Hortonworks Data Platform.”

With Hadoop version 2 now available, organizations using the big data framework are expected to branch out beyond batch-oriented MapReduce jobs and begin running more real-time jobs on their Hadoop clusters. For many, that means using Storm, which was originally developed by Twitter and is currently an incubator project at the Apache Software Foundation.

Storm will likely make its way into the Hadoop framework soon. Even though Storm isn’t a part of the recently released Hadoop version 2, it can still take advantage of YARN’s capability to dole out and keep track of computing resources and permissions in a Hadoop cluster.

Some Hadoop distributors, like Hortonworks, are not waiting around for Storm to become an official component of Hadoop. As Page notes in his blog, Yahoo has already done a lot of work integrating Storm with YARN, and has released its work under the Apache license. Hortonworks is looking to build upon this and to help its customers use Storm within Hadoop clusters.

The reason for this is simple: batch processes are just too slow for the types of big data applications that Hortonworks’ customers want to build. “One of the most common use cases that we see emerging from our customers is the antithesis of batch: stream processing in Hadoop,” Page writes. “Early adopters are using stream processing to analyze some of the most common new types of data such as sensor and machine data in real time.”

The ability to run Storm and MapReduce against the same data in a Hadoop cluster opens up many possibilities. Organizations can use Storm for low-latency processing of real-time data and MapReduce for deeper analysis of that same data. Running both on the same cluster eliminates the need to move data back and forth across the network, which not only saves time and money but also enables a whole new class of applications.
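
To make the shared-data idea concrete, here is a toy, pure-Python sketch; it does not use Storm or Hadoop APIs. A single in-memory list stands in for data stored in the cluster. A streaming handler updates per-sensor counts as each event arrives (the low-latency path), while a map/reduce-style batch pass later recomputes the same totals over the stored events (the deeper-analysis path). The event format and the names `stored_events`, `on_event`, and `batch_counts` are hypothetical.

```python
from collections import Counter, defaultdict
from functools import reduce

# Hypothetical shared store standing in for data kept in the cluster.
stored_events = []

# Streaming path: per-sensor counts updated incrementally as events arrive.
live_counts = defaultdict(int)


def on_event(event):
    """Handle one incoming event: persist it, then update the live view."""
    stored_events.append(event)        # land the event in the shared store
    live_counts[event["sensor"]] += 1  # low-latency incremental update


def batch_counts(events):
    """Batch path: a map/reduce-style pass over the same stored events."""
    mapped = (Counter({e["sensor"]: 1}) for e in events)  # map: one count per event
    return reduce(lambda a, b: a + b, mapped, Counter())  # reduce: sum the counts


for e in [{"sensor": "temp-1", "value": 21.5},
          {"sensor": "temp-2", "value": 19.0},
          {"sensor": "temp-1", "value": 21.7}]:
    on_event(e)

print(dict(live_counts))                  # {'temp-1': 2, 'temp-2': 1}
print(dict(batch_counts(stored_events)))  # {'temp-1': 2, 'temp-2': 1}
```

Because both paths read the same stored list, nothing is copied between systems before the batch pass runs. In a real deployment the two paths would be Storm and MapReduce jobs sharing data in one cluster, which this toy only gestures at.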

For example, Page cites the work of the UC Irvine Medical Center, which recently launched a technology called SensiumVitals to monitor patient vital signs and transmit them every minute. According to Page, the system takes 4,320 snapshots of each patient’s vital signs every day. Algorithms then run on this streaming data to determine what the snapshots mean and whether the patient is getting healthier, sicker, or somewhere in between.
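
As an illustration of the kind of streaming logic described above, here is a minimal sketch that assumes a single vital sign (heart rate) arriving as one reading at a time. It compares the mean of the most recent window of readings with the window before it and labels the trend by whether readings are moving toward or away from an assumed healthy value. The class name `TrendMonitor`, the 75 bpm target, the window size, the threshold, and the labels are all hypothetical choices; this is not how SensiumVitals or UC Irvine's algorithms actually work.

```python
from collections import deque
from statistics import mean


class TrendMonitor:
    """Classify a stream of readings as improving, worsening, or stable.

    Hypothetical parameters: `target` is an assumed healthy value, `window`
    is the number of readings per comparison window, and `threshold` is how
    much the distance to `target` must change to count as a trend.
    """

    def __init__(self, target=75.0, window=5, threshold=3.0):
        self.target = target
        self.window = window
        self.threshold = threshold
        # Keep just enough history for two back-to-back windows.
        self.readings = deque(maxlen=2 * window)

    def update(self, value):
        """Add one reading; return a trend label once enough data has arrived."""
        self.readings.append(value)
        if len(self.readings) < 2 * self.window:
            return "warming up"
        history = list(self.readings)
        previous = mean(history[: self.window])
        recent = mean(history[self.window:])
        # Change in distance from the assumed healthy target (negative = closer).
        change = abs(recent - self.target) - abs(previous - self.target)
        if change <= -self.threshold:
            return "improving"
        if change >= self.threshold:
            return "worsening"
        return "stable"


monitor = TrendMonitor()
# Simulated heart-rate readings drifting back toward the assumed target.
stream = [110, 108, 106, 104, 102, 95, 90, 86, 82, 80]
for bpm in stream:
    label = monitor.update(bpm)
print(label)  # improving
```

A bounded `deque` keeps memory constant no matter how long the stream runs, which matters when readings arrive around the clock for every patient.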

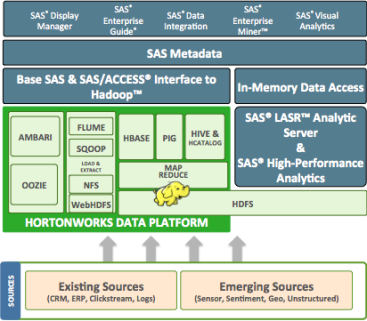

The partnership between Hortonworks and SAS follows a similar minimize-the-data-movement theme: by giving SAS hooks into the Hortonworks Data Platform (HDP), Hortonworks is giving customers another way to analyze data where it already resides in Hadoop.

Specifically, the partnership is designed to make it easy to run SAS tools, such as Visual Analytics, High-Performance Analytics Server, and LASR Analytic Server, on top of Hortonworks Hadoop clusters. These tools will access HDP data through the SAS/ACCESS Interface to Hadoop layer, and SAS will also maintain its own metadata layer within the Hadoop environment.

SAS says its tools will complement existing Hadoop analytic engines, such as MapReduce, Pig, and Hive, and give customers access to new data sources “that previously could not be captured and analyzed, such as social media, clickstream, IT server log, machine and sensor, and geo-location data.” In addition to standard analytic applications (e.g., customer churn analysis), the goal is to use this technology to drive insights in the areas of metadata management, data lineage, and security.

Randy Guard, SAS vice president of product management, says the partnership will help data scientists and business analysts explore, visualize, and analyze big data stored in Hadoop. “SAS provides domain-specific analytics and data management capabilities natively on Hadoop to reduce data movement and take advantage of Hadoop’s distributed computational power,” he says in a press release. “Use of SAS analytics software with Hortonworks Data Platform will help businesses quickly discover and capitalize on new business insights from their Hadoop-based data.”